Dr. Leo

Embracing the Future of AGI!

About Me

I'm Leo, an AI infrastructure architect focused on pushing the limits of modern hardware scalability—bridging GPUs, interconnects, and distributed systems to unlock their full potential. My work isn't just about performance tuning; it's about understanding the workarounds, bottlenecks, and structural shifts that define this technological era. Beyond engineering, I'm personally drawn to the deeper, human questions behind these changes—how they reshape not only computation, but our relationship to intelligence itself.

Projects

-

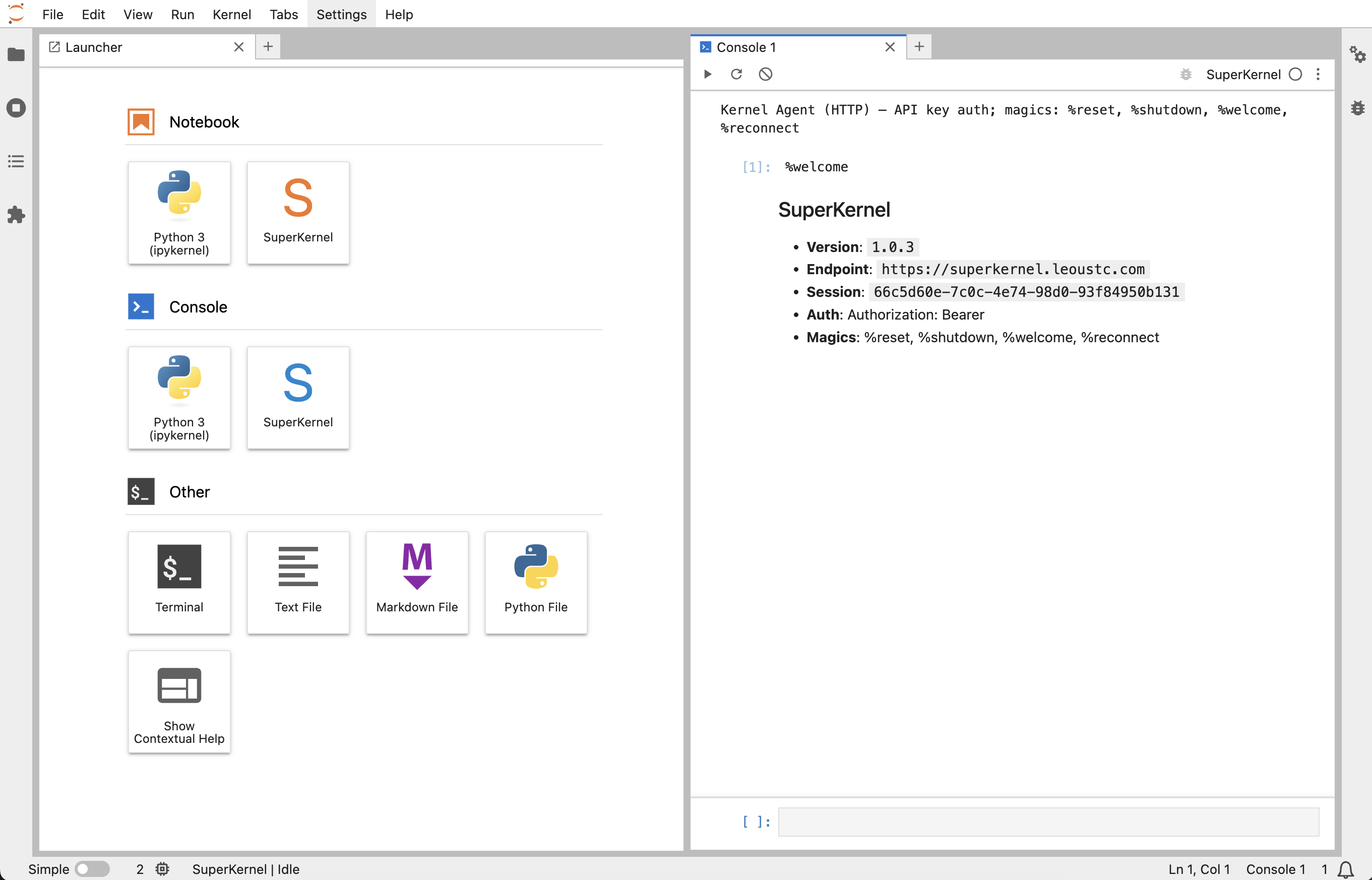

Super Kernel: a next-generation Jupyter kernel that unifies scale-up and scale-out networking, enabling seamless execution of notebook cells across thousands of GPUs and nodes. Designed to eliminate AI infrastructure complexity and deliver boundless scalability from your notebook.

- Jupyter Kernel Agent: a lightweight Jupyter kernel agent that lets you run code remotely by forwarding notebook cells to any HTTP endpoint, making it easy to connect Jupyter to clusters, GPU servers, or cloud backends with minimal setup.

- Jupyter Remote Kernel: a CLI tool for launching and managing remote Jupyter kernels over SSH port forwarding. Supports remote workspace mounting via sshfs, seamless local/remote sync, and robust error recovery.
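
To make the cell-forwarding idea behind the Jupyter Kernel Agent concrete, here is a minimal sketch that wraps a cell's source in JSON and POSTs it to an HTTP endpoint. It is an illustration only, not the project's actual code: the endpoint URL, the `{"code": ...}` payload shape, and the `build_request` and `forward_cell` names are all assumptions, and it presumes the backend replies with JSON.

```python
import json
import urllib.request


def build_request(endpoint: str, code: str) -> urllib.request.Request:
    """Wrap a notebook cell's source in a JSON POST request.

    The {"code": ...} payload shape is a hypothetical convention.
    """
    body = json.dumps({"code": code}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def forward_cell(endpoint: str, code: str, timeout: float = 30.0) -> dict:
    """Send the cell to the remote endpoint and return its decoded JSON reply."""
    request = build_request(endpoint, code)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Against a backend listening at a hypothetical address, a call would look like `forward_cell("http://localhost:8000/execute", "print(1 + 1)")`. Building the request separately from sending it keeps the payload format easy to inspect without a live server.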

Four Layers of AI Infrastructure

1. GPU & NVLink

Modern GPUs provide immense compute power, but their true value emerges when linked via NVLink into coherent clusters. This high-bandwidth, low-latency interconnect transforms isolated devices into a unified compute fabric—critical for training large-scale models like LLMs. From my perspective, this trend marks a fundamental shift in computing: from linear pipelines to two-dimensional, matrix-like computation, where performance depends not just on raw power, but on the architecture of connections themselves.

2. RDMA & Networking

Traditional networking protocols like TCP/IP were never designed for the demands of modern AI. RDMA fundamentally redefines this layer by enabling memory-to-memory transfers that bypass the remote CPU and the kernel network stack on the data path, achieving latencies of a few microseconds and near line-rate throughput on links of 200 Gbps and beyond. But more than a performance hack, RDMA represents a shift toward spatial communication models. In two-dimensional computing, data is no longer passed linearly, but flows across a fabric, which makes topology, synchronization, and bandwidth symmetry first-class design principles.

3. Kubernetes & Scheduling

Kubernetes brought scale to the cloud, but AI workloads require more than generic orchestration—they demand hardware-aware, interconnect-sensitive scheduling. My work pushes K8s toward a topology-driven model, where GPU placement, NVLink awareness, and RDMA path optimization are tightly integrated. This aligns with the broader move from time-based to space-based computation: where resource geometry and inter-node relationships directly impact performance and scalability.
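
As a toy illustration of what topology-driven placement means, the sketch below picks the node and GPU subset with the most directly NVLink-connected pairs. It is not my scheduler and uses no Kubernetes API: the `nodes` data layout and the `nvlink_pairs` and `pick_placement` names are assumptions made for the example, and a real scheduler would also weigh RDMA paths, NUMA locality, and fragmentation.

```python
from itertools import combinations


def nvlink_pairs(gpus, links):
    """Count GPU pairs in `gpus` that share a direct NVLink connection.

    `links` holds (low_id, high_id) tuples, so pairs are built from sorted ids.
    """
    return sum(1 for a, b in combinations(sorted(gpus), 2) if (a, b) in links)


def pick_placement(nodes, want):
    """Choose the node and GPU subset with the most NVLink-connected pairs.

    `nodes` maps node name -> (free GPU ids, set of (low_id, high_id) links).
    Returns (node, gpus, score), or None if no node has `want` free GPUs.
    Ties keep the first candidate found (nodes and GPU ids in sorted order).
    """
    best = None
    for name in sorted(nodes):
        free, links = nodes[name]
        for subset in combinations(sorted(free), want):
            score = nvlink_pairs(subset, links)
            if best is None or score > best[2]:
                best = (name, subset, score)
    return best


# Hypothetical cluster: node-a has two linked pairs, node-b is fully meshed.
cluster = {
    "node-a": ([0, 1, 2, 3], {(0, 1), (2, 3)}),
    "node-b": ([0, 1, 2], {(0, 1), (0, 2), (1, 2)}),
}
print(pick_placement(cluster, 3))  # ('node-b', (0, 1, 2), 3)
```

The exhaustive search is exponential in the number of free GPUs per node, which is acceptable for the handful of GPUs in one server but would need a heuristic at larger scale.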

4. AI-Native Application Stack

AI applications today aren't just services; they're dynamic, emergent systems. LLM inference, multimodal fusion, and token streaming all rely on real-time orchestration of compute, memory, and network. To support this, infrastructure must become more reactive, adaptive, and tensor-native. In my view, this signals a new layer of computing logic, one rooted in matrix structures and flow fields, where software doesn't just run on hardware, it resonates with it.

Books (coming soon)

- 📘 Beyond Algorithms — Explores the structural and philosophical foundations of intelligence through AI, networks, and quantum theory.

- 📗 Behind Algorithms — A technical and historical tour from CUDA cores to fabric-native GPU clusters.

- 📙 Between Algorithms — Reflects on how AI infrastructure reshapes society, cognition, and our understanding of life.

Education

- Ph.D., University of Wisconsin–Madison

- B.S., University of Science and Technology of China

Professional Affiliations

- Voting member, Linux Foundation Edge and Akraino Project (2022, 2023)

- Vice Director, Film Advanced Technology Committee, CSMPTE (2018)

- Industry Professor, Jiangnan University (2017–2021)

Selected Patents

| No. | Patent ID | Title |
|---|---|---|
| 21 | US20120290696 | Longest Prefix Matching of Variable-Sized Hierarchical Names by Treelets |
| 20 | CN20150605 | File Transfer Based on Named Data Networking Caching Algorithm |
| 19 | CN119179440A | Distributed Storage Based on Centralized Metadata |
| 18 | CN119336686A | Expanding Base Address Register Space in PCIe Devices |
| 17 | CN114827151A | Heterogenous Clustered Devices via PCIe CXL and UCIe |
| 16 | CN114745325A | MAC in MAC Network Encoding via PCIe, CXL, UCIe |
| 15 | CN110891081A | Packet Routing and Broadcasting Method |
| 14 | CN20150605CN | Vehicular Networking with Content-Centric Networking |
| 13 | CN111027396A | Assisted Driving System and Cloud Server |
| 12 | CN110929087A | Audio Classification Method and Device |
| 11 | CN106708749A | Fast Searching Based on Fractional Algorithms |
| 10 | CN109688204A | File Download Using Named Data Networking |
| 9 | CN109448684A | Intelligent Music Composition |
| 8 | CN111209098A | Intelligent Rendering Scheduling System |
| 7 | CN110955515A | File Processing for Electronic Devices |
| 6 | CN111178151A | Micro-Expression Recognition Based on AI |
| 5 | CN106095996B | Text and Content Classification System |
| 4 | CN110944034A | Web-Based Resumable Transmission Protocol |
| 3 | CN111125045A | Lightweight ETL Processing Platform |
| 2 | 2017211770803 | Portable Accelerated Mobile Video Transmission |
| 1 | CN107819704A | Scalable Wireless Media App for Edge Computing |

Contact

Feel free to connect via email or on GitHub.